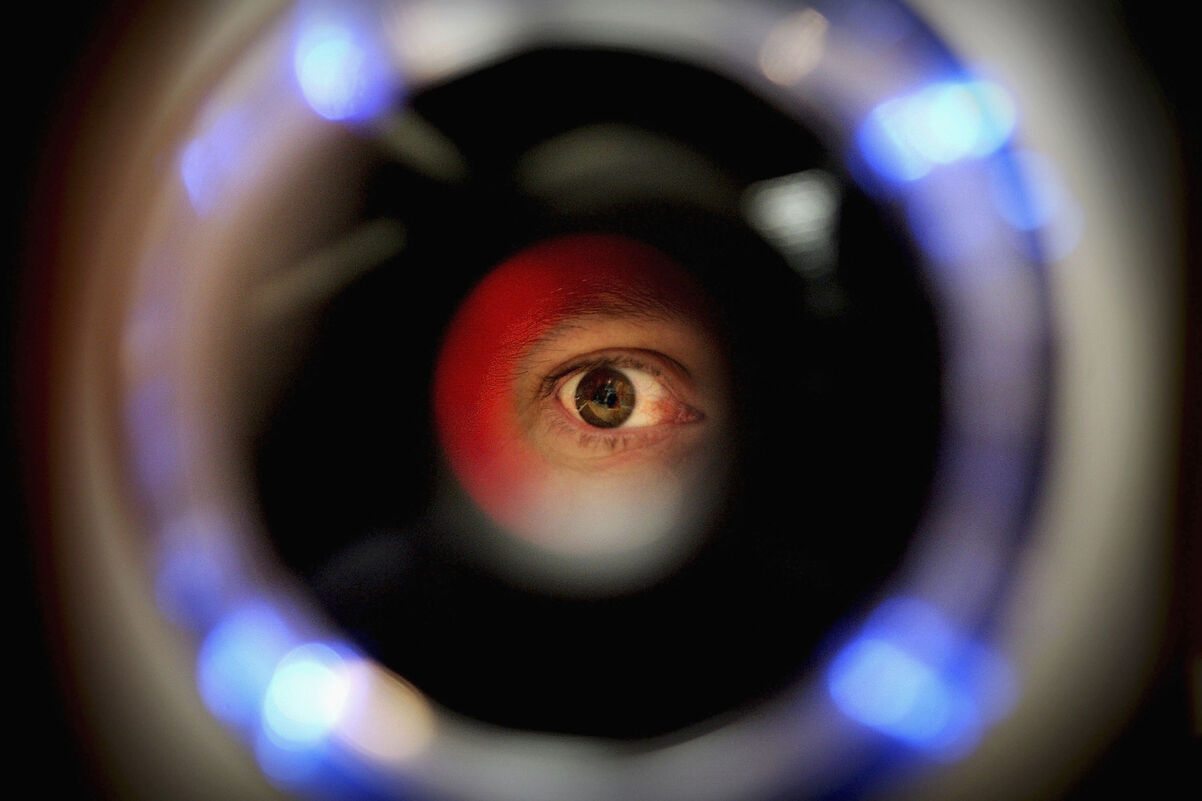

A controversial study on whether facial recognition software can detect “gayface” has been lambasted by LGBTQ advocacy groups, who warn it could be used to target queer people.

The study, which was published in the Journal of Personality and Social Psychology, claimed that artificial intelligence could determine human sexuality with startling accuracy. Researchers from Stanford University used photos pulled from online dating sites. The technology correctly predicted the sexual orientation of male faces 81 percent of the time, and it correctly guessed whether women were lesbian or heterosexual 74 percent of the time.

The Human Rights Campaign and GLAAD were horrified by the results, condemning the study’s findings shortly after it was first reported in The Economist.

“Imagine for a moment the potential consequences if this flawed research were used to support a brutal regime’s efforts to identify and/or persecute people they believed to be gay,” says HRC Director of Public Education and Research Ashland Johnson in a public statement. She adds that the study is “dangerously flawed” and makes countless people’s “lives worse and less safe than before.”

Chechnya, a semi-autonomous republic within Russia, has made headlines in recent months after police began rounding up men suspected of being gay. The region's LGBTQ population has been persecuted, forced into prison camps, and even murdered.

It’s estimated that more than 100 gay men have been detained by authorities.

LGBTQ groups suggest that highlighting the ways in which technology can be exploited to further target queer and trans people is a gift to someone like Chechen leader Ramzan Kadyrov. The strongman has also promised to “eliminate” the region's LGBTQ population.

“Stanford should distance itself from such junk science,” Johnson concludes.

GLAAD’s Jim Halloran, who signed onto a joint statement with HRC criticizing the findings, also argues that the study wrongly conflated sexuality with the physical characteristics of a fraction of the LGBTQ community.

He maintains that technology cannot determine one’s orientation. What the software can detect, he says, “is a pattern that found a small subset of out white gay and lesbian people on dating sites who look similar.”

Critics note that the study leaves out bisexual and trans individuals, as well as people of color.

Michal Kosinski and Yilun Wang, the Stanford researchers behind the study, have responded to the criticism by saying that they didn’t invent the software.

They just analyzed it.

“We did not build a privacy-invading tool,” say Wang and Kosinski, who write that the findings should be viewed as a warning. “We studied existing technologies, already widely used by companies and governments, to see whether they present a risk to the privacy of LGBTQ individuals. We were terrified to find that they do.”

The researchers say they debated whether their conclusions “should be made public at all.”

Following widespread backlash to the study on social media, editors at the Journal of Personality and Social Psychology told The Outline that the paper is in the process of being reevaluated for ethical considerations.

They said the results of that review would be made public in “some weeks.”

